|

I'm a third-year CS PhD candidate at the University of Texas at Dallas (UTD), advised by Dr. Yunhui Guo, and part of the Data Efficient Intelligent Learning Lab. Before this, I obtained my MS in Electrical Engineering from the University of Southern California (USC) and a Bachelor's degree with honors in Electrical and Electronics Engineering from IIIT Bhubaneswar (IIIT-Bh), India. Here are some of the key directions driving my research:

During my Master's, I worked closely with Dr. Yonggang Shi, and before that with Dr. Shri Narayanan. As an undergraduate, I was fortunate to work with Dr. Ren Hongliang (NUS), Dr. Prasanta Kumar Ghosh (IISc), and Dr. Aurobinda Routray (IIT-Kharagpur). I have published at top-tier ML, computer vision, and signal processing conferences, including ICCV, NeurIPS (3x), AAAI, ECCV, and ICASSP (2x). I'm happy to chat and discuss potential collaborations. Feel free to contact me. Email / CV / Google Scholar / GitHub / LinkedIn |

|

|

Oct '25 |

Gave a talk at the MCL workshop @ ICCV 2025. |

|

Sep '25 |

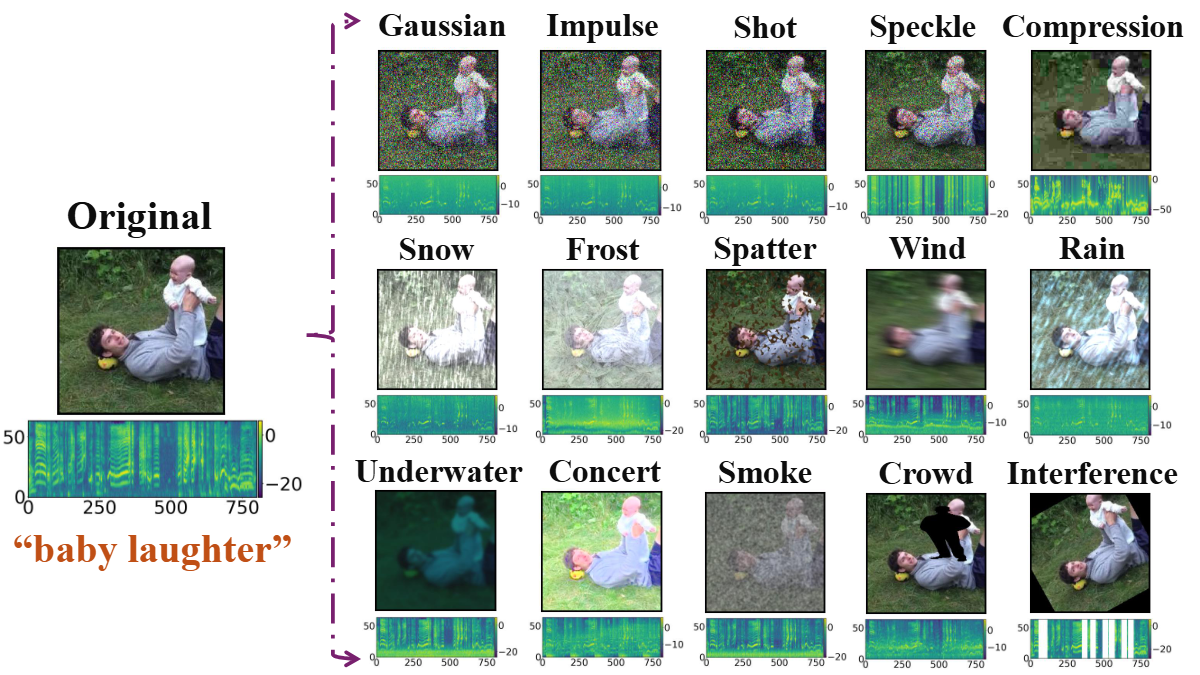

AVROBUSTBENCH has been accepted to NeurIPS (D&B) 2025! See you in San Diego, CA! 🏖️🌮 |

|

Aug '25 |

Received a travel grant from the ICCV community! |

|

Jun '25 |

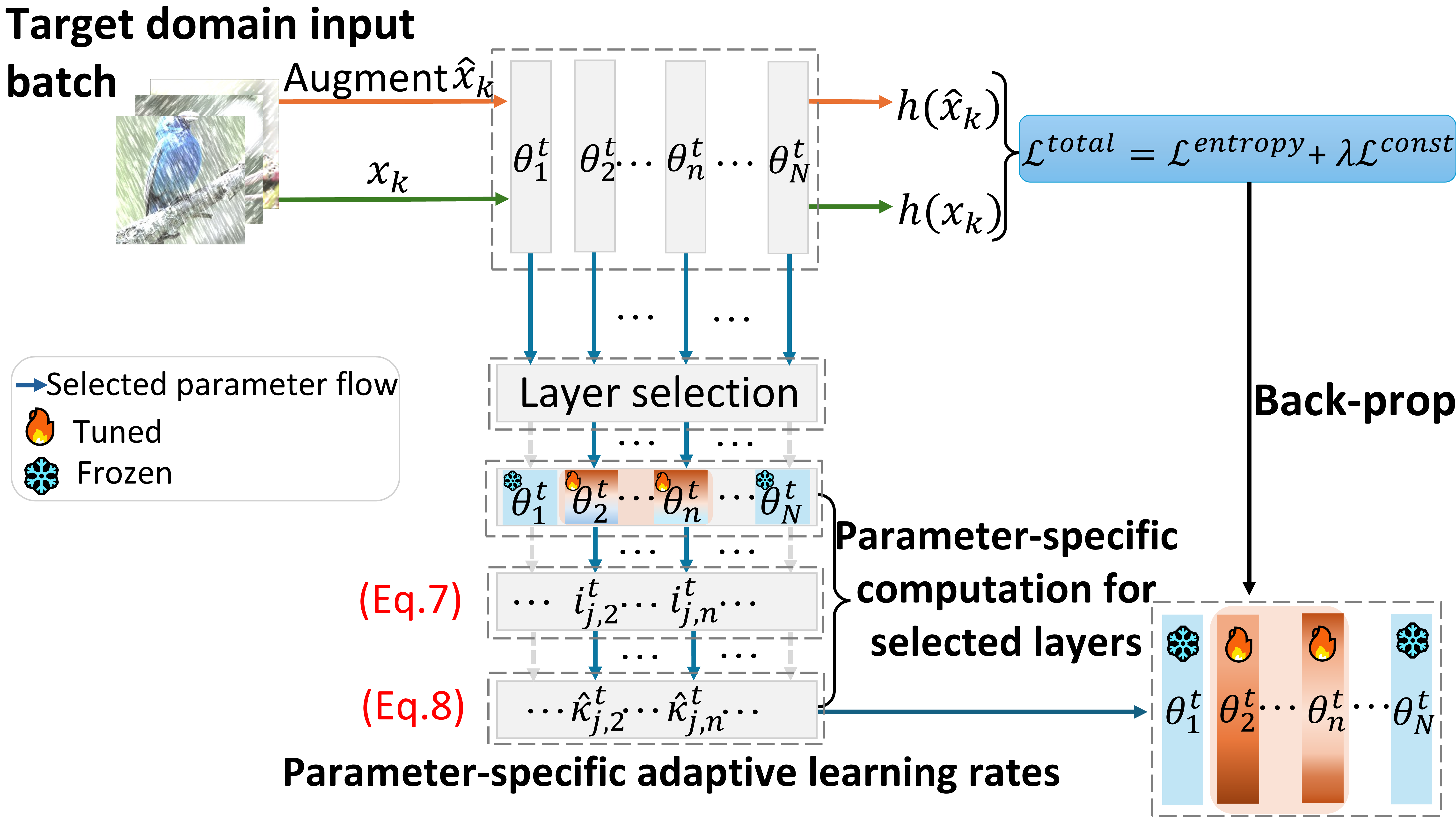

BATCLIP has been accepted to ICCV 2025. See you in gorgeous Hawai'i! 🌴 |

|

May '25 |

We're organizing the 1st Workshop on Multimodal Continual Learning at ICCV 2025! |

|

May '25 |

Crushed my quals — officially a PhD candidate now! |

|

Mar '25 |

Excited to be co-organizing the 2nd Workshop on Test-Time Adaptation: Putting Updates to the Test at ICML 2025! |

|

Feb '25 |

This summer, I'll be joining Dolby Laboratories as a PhD Research Intern! |

|

Dec '24 |

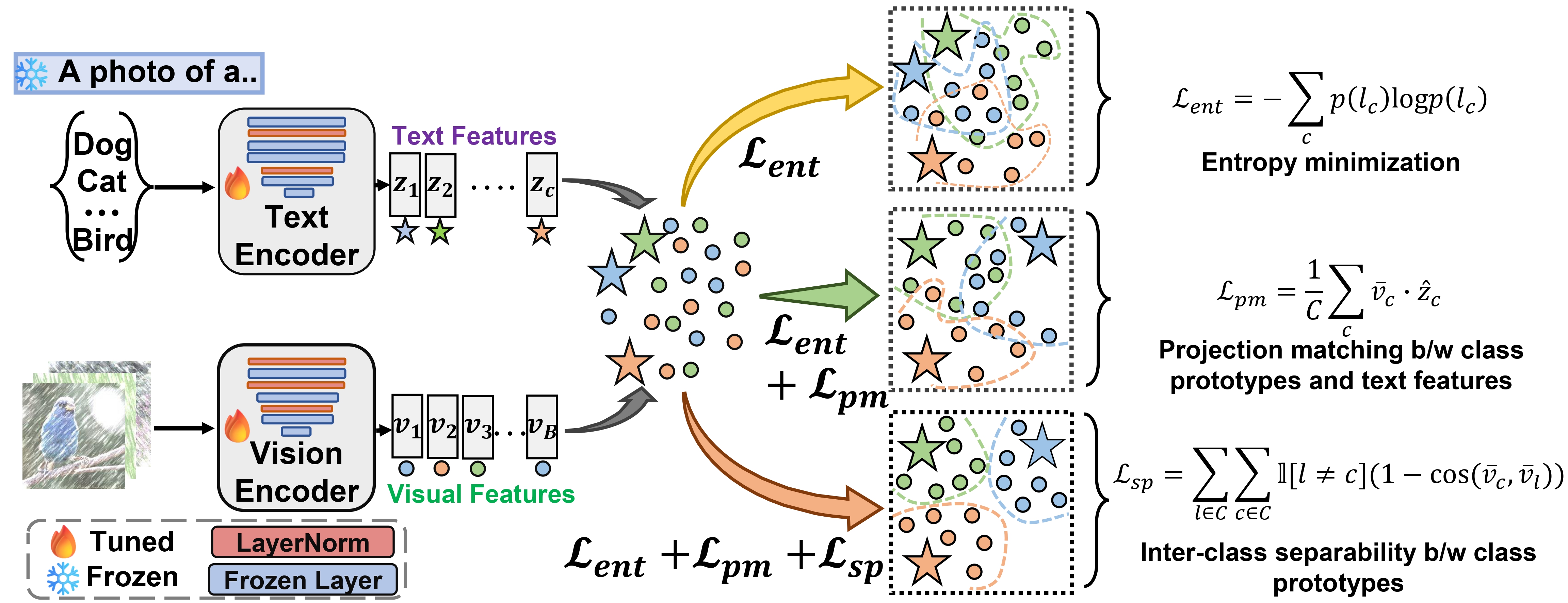

PALM has been accepted to AAAI 2025 for an Oral presentation! |

|

Nov '24 |

Serving as a CVPR 2025 reviewer. |

|

Oct '24 |

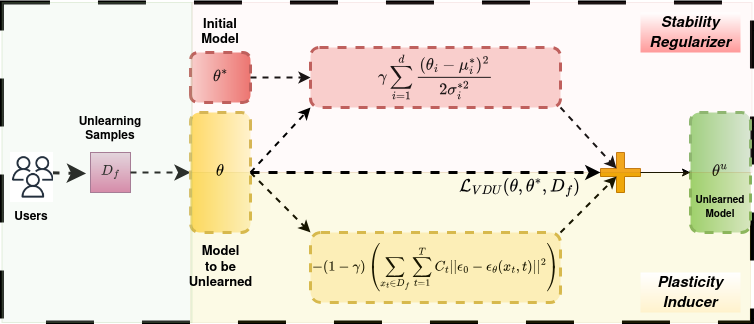

Variational Diffusion Unlearning (VDU) has been accepted to the SafeGenAI workshop at NeurIPS 2024! |

|

Sep '24 |

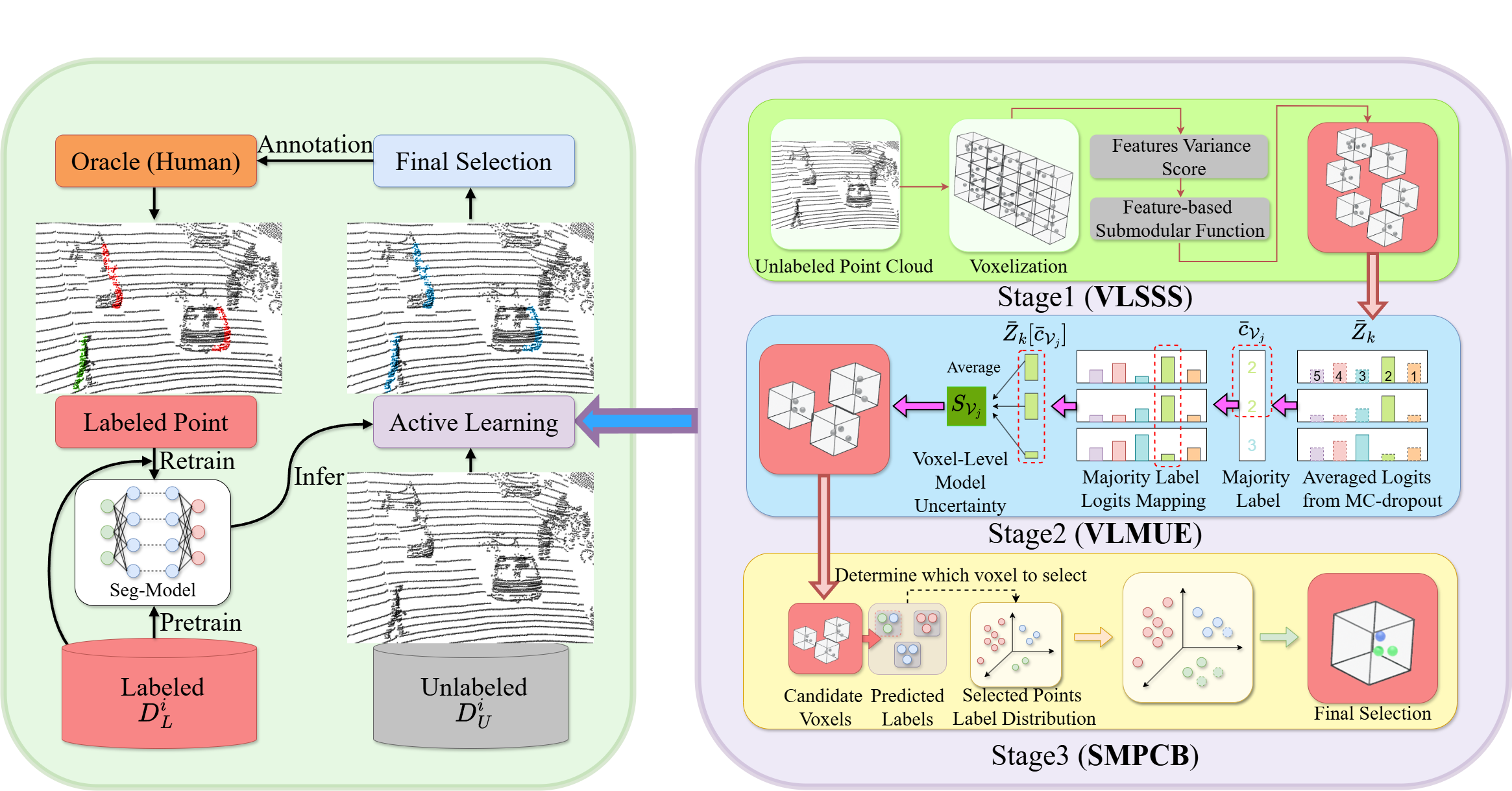

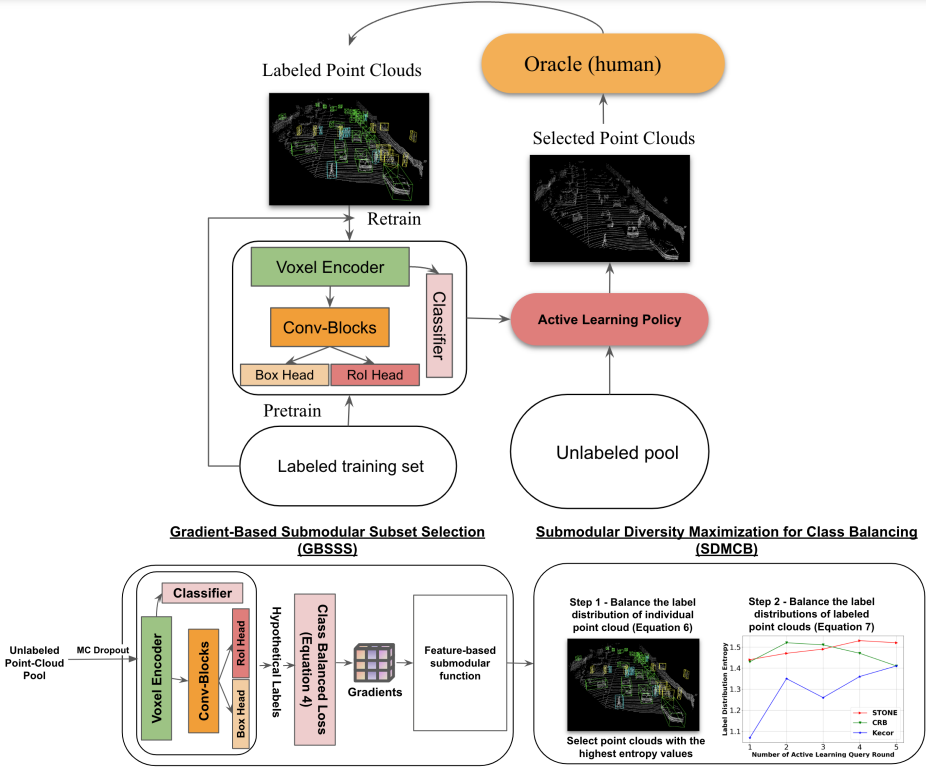

Our paper on submodular optimization for active 3D object detection has been accepted to NeurIPS 2024! |

|

Aug '24 |

Serving as a reviewer for ICLR 2025. |

|

Jul '24 |

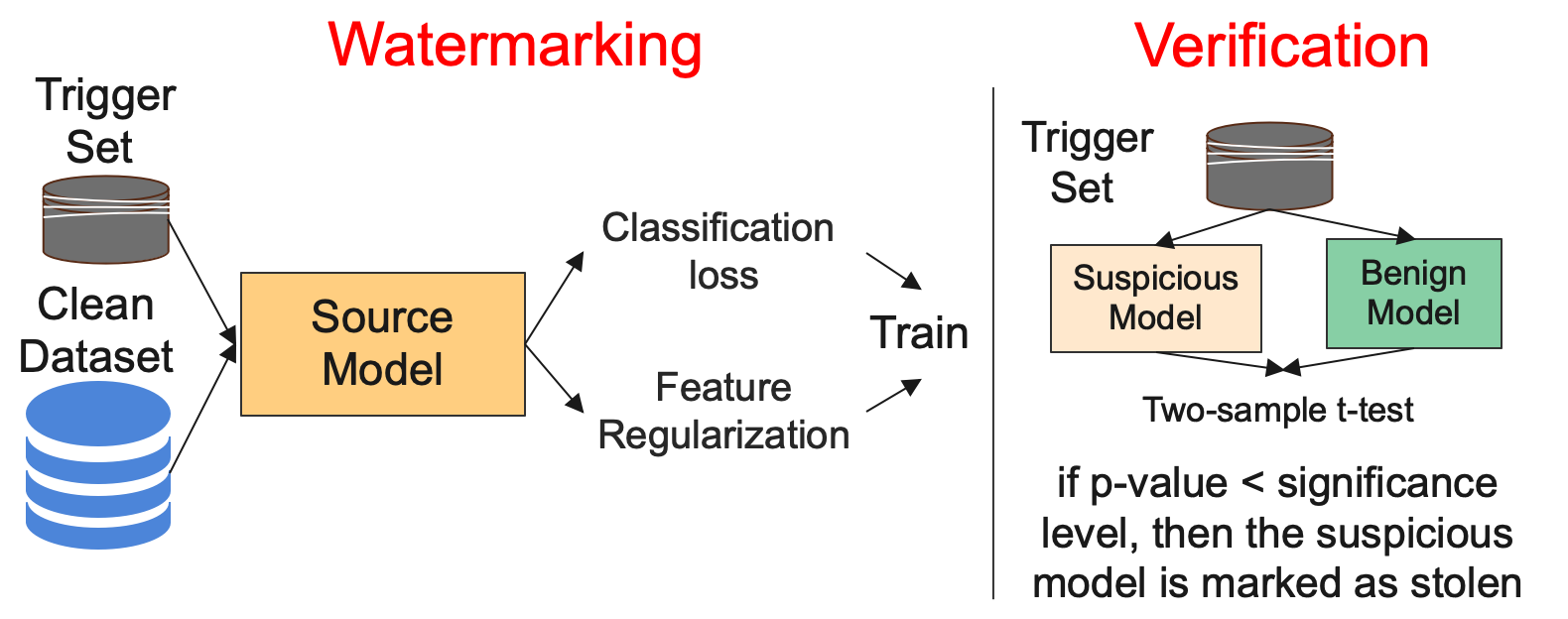

Our paper on DNN watermarking has been accepted to ECCV 2024! |

|

May '24 |

Serving as a reviewer for BMVC 2024. |

|

Mar '24 |

Serving as a reviewer for the CVPR 2024 Workshop on Test-Time Adaptation: Model, Adapt Thyself! (MAT). |

|

Feb '24 |

Serving as a reviewer for ECCV 2024. |

|

Jan '24 |

Our paper on SSL features for dysarthric speech has been accepted to the SASB workshop @ ICASSP 2024! |

|

Jan '24 |

I'm glad to have been selected to attend the MLx Representation Learning and Generative AI Oxford Summer School. |

|

Source code by Jon Barron. |