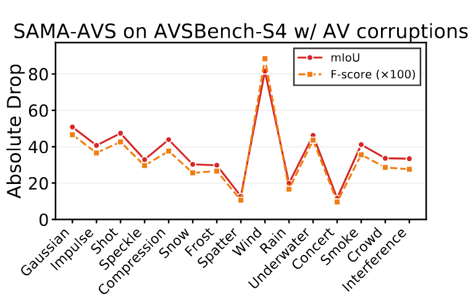

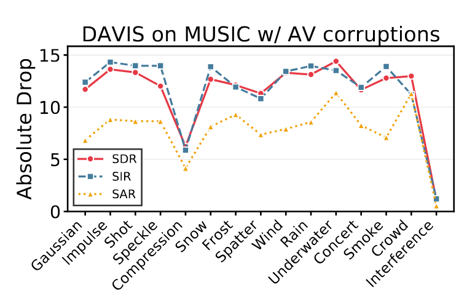

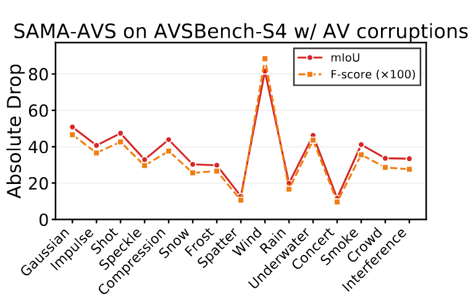

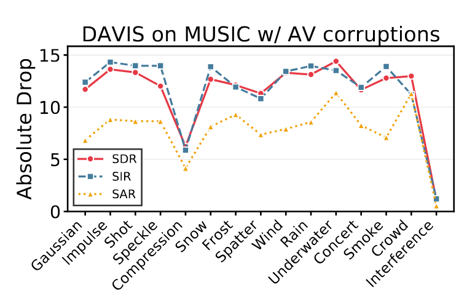

➤ To emulate real-world settings, we release realistic, co-occurring, and correlated audio-visual corruptions for test-time evaluation. We propose 15 bimodal audio-visual corruptions, each at 5 severity levels, categorized as Digital, Environmental, and Human-Related. The proposed corruptions can be easily extended to any audio-visual or speech-visual test set.

➤ We also release four benchmark datasets: AUDIOSET-2C, VGGSOUND-2C, KINETICS-2C, and EPICKITCHENS-2C, each containing 75 bimodal audio-visual corruptions (15 corruption types × 5 severity levels) applied to its respective source test set.

➤ We propose a simple and effective online test-time adaptation (TTA) method, AV2C, to overcome bimodal distributional shifts. AV2C leverages cross-modal fusion by penalizing high-entropy samples, enabling models to adapt on the fly at test time.
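As a rough illustration of what penalizing high-entropy samples can look like, the sketch below computes the prediction entropy of each test sample from fused audio-visual logits and down-weights uncertain samples in an entropy-minimization objective. This is not the AV2C implementation: the linear weighting rule, the function names, and the example logits are assumptions made for illustration only.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def entropy_weights(batch_logits):
    """Per-sample entropies and weights for a batch of fused logits.

    Assumed rule (hypothetical, not taken from the source):
    weight = 1 - H / log(C), so a confident sample gets a weight near 1
    and a maximally uncertain (uniform) sample gets a weight near 0.
    """
    max_entropy = math.log(len(batch_logits[0]))
    entropies = [entropy(softmax(logits)) for logits in batch_logits]
    weights = [max(0.0, 1.0 - h / max_entropy) for h in entropies]
    return entropies, weights


def weighted_entropy_loss(batch_logits):
    """Mean of weight * entropy over the batch (a scalar to minimize)."""
    entropies, weights = entropy_weights(batch_logits)
    return sum(w * h for w, h in zip(weights, entropies)) / len(entropies)


if __name__ == "__main__":
    # Hypothetical fused audio-visual logits for three test samples.
    fused_logits = [
        [10.0, 0.0, 0.0],  # confident prediction
        [1.0, 0.5, 0.0],   # uncertain prediction
        [0.0, 0.0, 0.0],   # uniform prediction (maximum entropy)
    ]
    entropies, weights = entropy_weights(fused_logits)
    for h, w in zip(entropies, weights):
        print(f"entropy={h:.4f} weight={w:.4f}")
    print(f"loss={weighted_entropy_loss(fused_logits):.4f}")
```

In an online TTA loop, a scalar of this kind would typically be backpropagated through a small subset of model parameters for each incoming test batch. Which parameters AV2C updates, and its exact objective, are not described in this summary.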

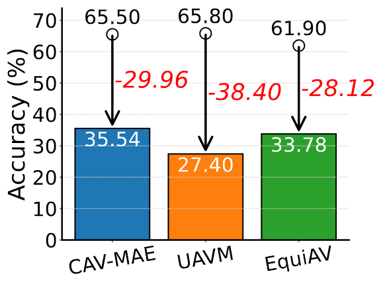

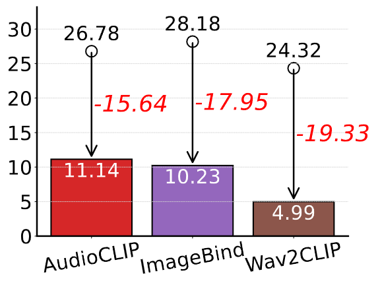

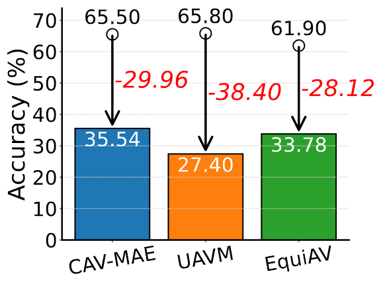

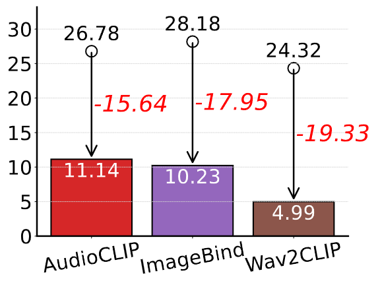

We observe a significant gap between clean accuracy and performance under our bimodal AV corruptions (severity 5). We report top-1 accuracy, absolute robustness, and relative robustness averaged across corruptions. Models include both supervised and self-supervised variants.
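The robustness scores mentioned above can be computed from per-corruption accuracies. The sketch below assumes definitions commonly used in robustness benchmarks, with accuracies in percent: absolute robustness as 1 - (A_clean - A_corrupt) / 100 and relative robustness as 1 - (A_clean - A_corrupt) / A_clean, each averaged across corruptions. These formulas, the function names, and the example accuracies are assumptions for illustration, not values or definitions taken from the benchmark.

```python
def absolute_robustness(clean_acc, corrupt_acc):
    """Assumed definition: 1 - (A_clean - A_corrupt) / 100, accuracies in percent."""
    return 1.0 - (clean_acc - corrupt_acc) / 100.0


def relative_robustness(clean_acc, corrupt_acc):
    """Assumed definition: 1 - (A_clean - A_corrupt) / A_clean, accuracies in percent."""
    return 1.0 - (clean_acc - corrupt_acc) / clean_acc


def summarize(clean_acc, corrupt_accs):
    """Average top-1 accuracy and both robustness scores across corruptions."""
    n = len(corrupt_accs)
    return {
        "mean_corrupt_acc": sum(corrupt_accs) / n,
        "absolute_robustness": sum(
            absolute_robustness(clean_acc, a) for a in corrupt_accs
        ) / n,
        "relative_robustness": sum(
            relative_robustness(clean_acc, a) for a in corrupt_accs
        ) / n,
    }


if __name__ == "__main__":
    # Hypothetical numbers: clean top-1 accuracy and accuracy under two
    # corruptions at severity 5.
    for name, value in summarize(60.0, [30.0, 45.0]).items():
        print(f"{name}: {value:.3f}")
```

Under these assumed definitions, relative robustness normalizes the accuracy drop by the clean accuracy, so a model with low clean accuracy is not rewarded simply for having little accuracy left to lose.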

@inproceedings{maharana2025avrobustbench,
  title={AVROBUSTBENCH: Benchmarking the Robustness of Audio-Visual Recognition Models at Test-Time},
  author={Maharana, Sarthak Kumar and Kushwaha, Saksham Singh and Zhang, Baoming and Rodriguez, Adrian and Wei, Songtao and Tian, Yapeng and Guo, Yunhui},
  booktitle={Advances in Neural Information Processing Systems},
  year={2025}
}